
Has scaling AI hit a wall?

04/18/2025

Summary

From LeNet to GPT-4, AI has ridden a decade-long wave of exponential scaling. But with rising costs, data limits, and diminishing returns, has that wave finally broken?

1. Introduction

Back in 2022, when I sent my first prompt to the much-hyped app called ChatGPT, I never could have imagined how dramatically the AI landscape would transform. Back then, I was fascinated by the model's seemingly magical ability to generate text and code. Today, I find myself confronting entirely different questions: Has AI development reached its limits? Was the exponential growth of recent years just a temporary phase?

Perhaps you've also read headlines like "Has AI hit a wall?" or wondered why OpenAI reportedly plans to offer a $2,000 plan. This seems counterintuitive at first glance, given that the media continue to praise AI as the most revolutionary technology of our time, while figures like Sam Altman and Dario Amodei speak of a near future with AGI (Artificial General Intelligence). After countless hours studying research papers, watching well-known talks, and experimenting with various models, I want to share the remarkable history behind modern AI models in this article. It is a journey of surprising breakthroughs, bold financial bets, and one fundamental insight that has dominated AI development over the last decade: bigger really is better. Join me on this journey from the humble beginnings of neural networks to today's giants, and let's find out together whether the era of simple scaling is truly reaching its limits or whether we're just at the beginning of an even more exciting chapter.

2. AlexNet - The origin of the scaling idea

The fundamental ideas behind modern AI breakthroughs are surprisingly old; they had been around for decades before their true potential was unleashed.

One of the first successful neural networks was LeNet, developed by Yann LeCun at Bell Labs in 1989. Inspired by the human visual cortex, he created a convolutional neural network (CNN) architecture to classify handwritten digits. This innovative model contained only a few thousand parameters, almost nothing by today's standards. A critical limitation at the time was computing power, which was both extremely expensive and scarce. Neural networks had to run on CPUs, which lacked the parallel processing capabilities needed to scale these architectures to their full potential.

Building on this early success, LeCun kept refining the architecture. In 1998, he released its fifth version, LeNet-5, which achieved much better results. While he kept the fundamental CNN principles, he introduced several architectural refinements and scaled the model up to about 60,000 parameters, a considerable size for that era. Despite this progress, LeNet-5 was still constrained by the limited CPU-based computing power of the time.
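To make a figure like "60,000 parameters" concrete, here is a minimal Python sketch that counts the trainable parameters of a LeNet-5-style network layer by layer. It assumes the simplified variant found in most modern tutorials (fully connected C3 layer, pooling layers without weights, a plain dense output layer), not the exact 1998 design, whose sparse C3 connections and trainable subsampling layers give a slightly different total of roughly 60,000.

```python
# Parameter count of a LeNet-5-style CNN (assumed simplified modern variant:
# full C3 connectivity, weight-free pooling layers, dense output layer).


def conv_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """Each filter has kernel * kernel * in_channels weights plus one bias."""
    return out_channels * (kernel * kernel * in_channels + 1)


def dense_params(n_in: int, n_out: int) -> int:
    """One weight per input-output pair plus one bias per output unit."""
    return n_out * (n_in + 1)


# Pooling layers are omitted because they have no trainable parameters here.
layers = {
    "C1 conv 5x5, 1->6": conv_params(1, 6, 5),
    "C3 conv 5x5, 6->16": conv_params(6, 16, 5),
    "C5 conv 5x5, 16->120": conv_params(16, 120, 5),
    "F6 dense 120->84": dense_params(120, 84),
    "Output dense 84->10": dense_params(84, 10),
}

for name, count in layers.items():
    print(f"{name:22s} {count:>7,d}")

total = sum(layers.values())
print(f"{'Total':22s} {total:>7,d}")  # Total 61,706
```

Nearly 80% of the parameters sit in the C5 layer: when a layer connects many inputs to many outputs, its parameter count grows with their product, which is how widening and deepening networks made parameter counts explode on the way from LeNet to AlexNet.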

With hardware improving only slowly, the early 2000s were a period of relatively slow progress in neural networks. A widespread view in the research community at the time was that simple scaling could not lead to better results and that the only way forward was to discover new algorithms and architectures.

In 2012, however, this view was proven wrong. Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton won the prestigious ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with their model AlexNet, beating the competition by a stunning margin: a top-5 error rate of 15.3% versus 26.2% for the runner-up. Their approach was not primarily based on novel algorithms. Instead, they scaled the existing CNN architecture to unprecedented dimensions: 60 million parameters, which was unthinkable at the time. In a later interview, Ilya Sutskever described their key realizations: that truly deep neural networks with many layers could be trained end-to-end using backpropagation, and that graphics processing units (GPUs), which until then had been used primarily for gaming, could overcome the computational limitations that had held back neural network training. What surprised me most was that another key factor was Krizhevsky's highly optimized CUDA code, which made training on GPUs practical and has shaped the entire field ever since.

AlexNet thus became the first striking demonstration of the thesis that scaling AI leads to much better results, an idea that would shape the future of AI.

3. GPT - Scaling meets Natural Language

The Transformer

Until 2017, natural language processing was done primarily with recurrent neural networks (RNNs), an architecture that processes text one token at a time and carries information forward in a hidden state.

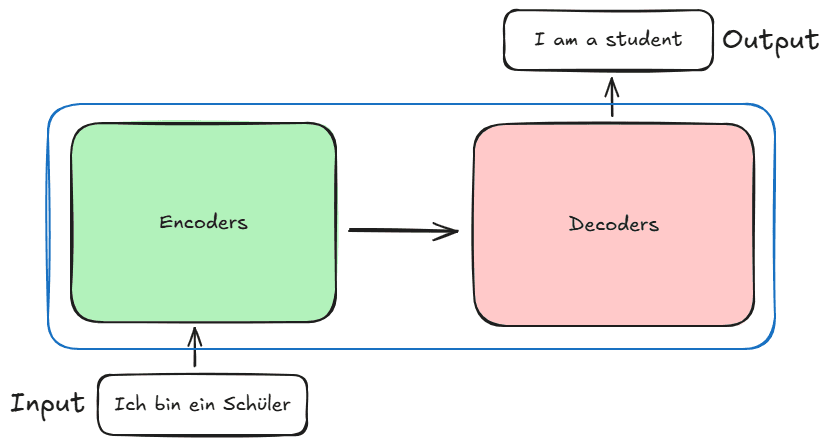

However, in 2017, a team of researchers at Google Brain and Google Research introduced the Transformer in their paper "Attention Is All You Need", a new architecture originally designed for machine translation. It consists of two parts: an encoder, which maps the input text into a high-dimensional representation, and a decoder, which uses this representation to predict the output one token at a time.

The power of the Transformer architecture lies in several key advantages:

- It relies heavily on the self-attention mechanism, allowing it to weigh the importance of different words in context

- Unlike RNNs, it isn't recurrent, so information doesn't have to pass through a long chain of sequential steps, which makes it easier to optimize

- It parallelizes extremely well, running much faster on GPUs

- It achieved better translation results than earlier models while requiring less training compute
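The self-attention mechanism from the first bullet can be sketched in plain Python. This follows the scaled dot-product attention formula from "Attention Is All You Need", softmax(Q K^T / sqrt(d_k)) V, with an optional causal mask of the kind decoder-only models like GPT use. It is a simplified sketch: real Transformers first multiply the inputs by learned projection matrices to obtain Q, K and V, use several attention heads, and run on GPUs with matrix libraries instead of Python loops.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax; entries of -inf receive zero weight."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = False) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    q and k hold one d_k-dimensional row per position, v one row per position.
    With causal=True, position i may only attend to positions 0..i.
    """
    d_k = len(k[0])
    out = []
    for i, q_row in enumerate(q):
        scores = []
        for j, k_row in enumerate(k):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future tokens
            else:
                dot = sum(a * b for a, b in zip(q_row, k_row))
                scores.append(dot / math.sqrt(d_k))
        weights = softmax(scores)  # how much position i attends to each j
        # Output row i is the attention-weighted average of the value rows.
        out.append([sum(w * v_row[c] for w, v_row in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


# Three toy token vectors; here Q = K = V = x (no learned projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(x, x, x, causal=True)[0])  # [1.0, 0.0]: the first token sees only itself
```

Each output row is computed independently of the others, so all rows can be processed at the same time. That independence is what lets the architecture parallelize so well on GPUs, unlike an RNN, which must finish step t before starting step t+1.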

This architectural innovation laid the groundwork for all modern large language models.

GPT-1

One year later, in 2018, researchers at OpenAI released GPT-1 (Generative Pre-trained Transformer), a model based only on the decoder part of the original Transformer architecture. The fundamental idea was to use the decoder's ability to predict the next token in a sequence to generate entirely new text from a given input. GPT-1 had around 117 million parameters, which was substantial for its time, and was pretrained on BooksCorpus, a dataset of roughly 7,000 unpublished books. The team, which included Ilya Sutskever from the earlier AlexNet team alongside lead author Alec Radford, built heavily on the idea that scaling leads to better results rather than on complex architectural innovations. GPT-1 performed impressively for its time and scale, showing the first signs that the scaling approach that had revolutionized computer vision could also succeed in natural language processing. It marked the beginning of the pretraining era in NLP.
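The generation loop behind this idea can be sketched as follows. To keep the example self-contained, the Transformer decoder is replaced by a hypothetical toy bigram model that simply counts which word follows which; only the loop itself (predict the next token, append it, repeat) carries over to GPT. Real GPT models work on subword tokens and usually sample from the predicted probability distribution instead of always taking the single most likely token.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Toy stand-in for a language model: count which token follows which."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(model: Dict[str, Counter], prompt: str, max_new_tokens: int = 5) -> str:
    """Greedy autoregressive decoding: predict one token, append it, repeat."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        followers = model.get(tokens[-1])
        if not followers:
            break  # the toy model has never seen this context
        next_token = followers.most_common(1)[0][0]  # most frequent follower
        tokens.append(next_token)  # the prediction becomes part of the input
    return " ".join(tokens)


corpus = [
    "scaling leads to better models",
    "scaling leads to better results",
    "better models need more data",
]
model = train_bigram(corpus)
print(generate(model, "scaling", max_new_tokens=4))  # scaling leads to better models
```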

GPT-2

Another year later, in 2019, OpenAI released the next member of the GPT family, GPT-2. Unlike its predecessor, it wasn't released as a single model but as a set of models of different sizes, ranging from 124 million to 1.5 billion parameters. These models kept almost the same architecture as GPT-1 but were scaled up massively.

GPT-2 further validated the idea that scaling leads to better results along three key dimensions: more parameters, more and higher-quality data (the roughly 40 GB WebText dataset), and more computing power. The results were striking: GPT-2 generated much better text, showed improved "zero-shot" capabilities (performing tasks it wasn't explicitly trained for), and had a context window twice as large (1,024 tokens instead of GPT-1's 512), allowing it to stay coherent over longer texts. Outside the AI research community, however, the model gained relatively little recognition.

GPT-3

In 2020, OpenAI released GPT-3, a dramatically scaled-up version of its GPT architecture. Based on the research paper "Scaling Laws for Neural Language Models", which we cover in the next chapter, the team took a massive financial risk, with training costs estimated at around 4.6 million USD, to scale the model to 175 billion parameters, more than 100 times larger than GPT-2's largest version. They also trained it on a vastly expanded dataset, processing roughly 300 billion tokens.
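The size of GPT-3's training run can be estimated with a rule of thumb from the Scaling Laws paper: training compute C ≈ 6·N·D FLOPs, where N is the number of parameters and D the number of training tokens. A minimal sketch, plugging in 175 billion parameters and the roughly 300 billion training tokens reported in the GPT-3 paper:

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens


gpt3_flops = training_flops(175e9, 300e9)
print(f"{gpt3_flops:.2e} FLOPs")  # 3.15e+23 FLOPs

# The same amount in petaflop/s-days: 1e15 FLOP/s sustained for 86,400 seconds.
pf_days = gpt3_flops / (1e15 * 86_400)
print(f"{pf_days:,.0f} PF-days")  # 3,646 PF-days
```

This lines up with the roughly 3.14 × 10^23 FLOPs (about 3,640 petaflop/s-days) reported in the GPT-3 paper. Converting FLOPs into dollars requires further assumptions about hardware utilization and GPU prices, which is why published cost figures for such runs are estimates.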

The results were remarkable. Beyond simply improving on existing metrics, GPT-3 showed surprising new abilities that hadn't been observed in smaller models. Most significantly, it demonstrated impressive few-shot learning: the ability to perform entirely new tasks after seeing just a few examples in the prompt, without any parameter updates. That's amazing if you think about it: simply scaling the model up led to a whole new set of capabilities!
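Few-shot learning happens entirely in the prompt. The sketch below builds such a prompt, modeled on the English-to-French illustration in the GPT-3 paper: a task description, a couple of solved examples, and a new input whose answer the model is expected to complete. The function name and the "=>" separator are illustrative choices, and sending the prompt to an actual model is left out, since any particular API call here would be an assumption.

```python
from typing import List, Tuple


def few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build an in-context-learning prompt: an instruction, a few solved
    examples, and the new input with its answer left blank for the model."""
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    lines.append(f"{query} =>")  # the model continues the text from here
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(prompt)
```

No weights change at any point: the "learning" consists of the model picking up the pattern from the examples in its context window and continuing it.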

GPT-4

With the successful launch of ChatGPT in late 2022, OpenAI had already demonstrated that its GPT-3.5 models were a great foundation for a successful consumer product. In spring 2023, a few months after the ChatGPT moment, it released GPT-4, its most advanced model to date, which further enhanced ChatGPT's capabilities.

I personally didn't notice a big difference, but according to many power users the improvements were stunning. GPT-4 was much more reliable on complex tasks, produced noticeably fewer hallucinations, and for the first time introduced multimodal features, allowing users to provide images as well as text as input. This represented a significant leap forward in both capability and usability.

However, this release marked a significant shift in OpenAI's approach to transparency. Unlike previous iterations, for which the company published detailed research papers, GPT-4 came with a technical report that disclosed almost nothing about the model's size, architecture, or training data, citing "the competitive landscape and the safety implications of large-scale models". This secrecy likely also reflects OpenAI's transition from a research-focused organization to a commercial company. With enormous demand for its products and increasing competition in the AI space, the company appears to have made a strategic decision to protect its intellectual property and competitive advantage by keeping technical details secret.

4. The Scaling Laws

As I mentioned in the GPT-3 section, one of the key reasons the researchers took this financial risk was the scaling laws. In this section, we will look closely at what these laws reveal.

Since the early days of GPT, the researchers suspected that model size, dataset size, and the amount of compute used for training were the main drivers of success. By analyzing these three factors across the models they had trained, they discovered fascinating patterns. These observations led to OpenAI's 2020 paper "Scaling Laws for Neural Language Models", in which they presented their findings as empirical laws.

The question driving these investigations was whether they could find patterns that would predict the performance of much larger models before actually training them. Based on these laws, they made the decision to invest in training the massive GPT-3 model.

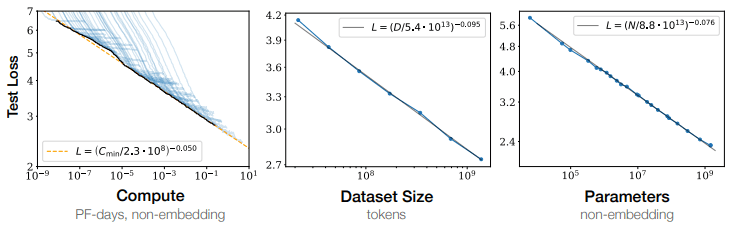

The paper revealed that model performance depends primarily on three different variables:

- The number of parameters (size of the model)

- The amount of training data (measured in tokens)

- The amount of compute used to train the model

Each of these variables correlates with model performance in a predictable way, provided it's not bottlenecked by the other two. Performance is measured by the test loss: the cross-entropy of the model's next-token predictions on held-out text, where lower is better.
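To make "test loss" more concrete: for every position in held-out text, the model assigns a probability to the token that actually comes next, and the loss is the average negative logarithm of those probabilities (cross-entropy, which the Scaling Laws paper reports in nats). The probabilities below are made-up numbers for illustration:

```python
import math
from typing import List


def cross_entropy(probs_of_correct_token: List[float]) -> float:
    """Average negative log-likelihood (in nats) that a model assigns to the
    tokens that actually came next in held-out text. Lower is better."""
    return -sum(math.log(p) for p in probs_of_correct_token) / len(probs_of_correct_token)


confident_model = [0.9, 0.8, 0.95]  # high probability on the true next tokens
uncertain_model = [0.2, 0.1, 0.3]   # low probability on the true next tokens

print(round(cross_entropy(confident_model), 3))  # 0.127
print(round(cross_entropy(uncertain_model), 3))  # 1.705
```

A perfect model that always gives the true next token probability 1 would reach a loss of 0; in practice natural text is never fully predictable, so the loss curves flatten toward a floor above zero.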

What makes these laws particularly powerful is that they follow surprisingly clean power laws: when plotted on logarithmic scales, as in the graphs above, they form nearly straight lines. This means that as you increase any of these factors, performance improves according to a predictable mathematical pattern.
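In the Scaling Laws paper, those straight lines correspond to fitted power laws of the following form, where N is the number of non-embedding parameters, D the number of training tokens, C_min the compute in petaflop/s-days, and L the test loss in nats. The constants are quoted approximately from the paper and only hold when the other two factors are not the bottleneck:

$$
L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad \alpha_N \approx 0.076, \quad N_c \approx 8.8 \times 10^{13}
$$

$$
L(D) = \left(\frac{D_c}{D}\right)^{\alpha_D}, \qquad \alpha_D \approx 0.095, \quad D_c \approx 5.4 \times 10^{13}
$$

$$
L(C_{\min}) = \left(\frac{C_c^{\min}}{C_{\min}}\right)^{\alpha_C^{\min}}, \qquad \alpha_C^{\min} \approx 0.050, \quad C_c^{\min} \approx 3.1 \times 10^{8}
$$

Taking the logarithm of the first law, for example, shows why it appears as a straight line with slope -α_N on a log-log plot:

$$
\log L(N) = \alpha_N \log N_c - \alpha_N \log N
$$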

The left graph shows how performance improves with more compute. The middle graph demonstrates the relationship between dataset size and performance. The right graph illustrates how increasing parameter count affects performance.

These empirical laws pointed clearly in one direction: scale up model size, training data, and compute in the right proportions, and you will get predictably better models. This gave OpenAI the confidence to make the enormous investment in GPT-3, with a solid expectation of the performance it would get. In retrospect it blows my mind how predictable this progress was, but at the time the scaling laws were merely an observation, and training GPT-3 was still a huge risk.

5. Has Scaling hit a wall?

Despite the extraordinary success of the simple scaling approach, there is growing evidence that it will eventually reach its limits. Several limitations became apparent as scaling was pushed further.

The most obvious limitation, which was already a major risk with GPT-3, is the cost of training. Training costs have skyrocketed to unprecedented levels: GPT-4, for example, reportedly cost over $100 million to train. A few key factors drive this explosion in costs. First, demand for high-end GPUs, and with it their price, skyrocketed, briefly making NVIDIA, which holds a near-monopoly on AI chips, the world's most valuable company. Second, as more companies recognized the potential of AI and began training such models, an arms race began. The result is a world in which there is a real risk that, while one company is still training its new model, a competitor releases a significantly better one, making the first obsolete.

While compute resources aren't technically limited by a hard wall, the economics become increasingly unfavorable as scale increases. With the widespread adoption of AI services, a significant portion of compute resources is now dedicated to serving existing models for inference rather than training new ones. This shift further constrains the resources available for scaling up models and diminishes the return on investment significantly.
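A rough calculation shows why serving can rival training. Under the approximations used in the Scaling Laws paper (about 6·N FLOPs per token for training, about 2·N FLOPs per token for a forward pass at inference), a minimal sketch for a GPT-3-sized model looks like this. It ignores the extra cost of long contexts and real-world hardware efficiency:

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """~6 FLOPs per parameter per training token (forward + backward pass)."""
    return 6 * n_params * n_tokens


def inference_flops(n_params: float, n_tokens: float) -> float:
    """~2 FLOPs per parameter per processed token (forward pass only)."""
    return 2 * n_params * n_tokens


n_params = 175e9      # GPT-3-sized model
train_tokens = 300e9  # training tokens reported for GPT-3

train = training_flops(n_params, train_tokens)
# How many served tokens until inference compute equals the whole training run?
break_even_tokens = train / inference_flops(n_params, 1)
print(f"{break_even_tokens:.1e} tokens")  # 9.0e+11 tokens, 3x the training tokens
```

In this idealized model, serving three times as many tokens as the model was trained on costs as much compute as the entire training run, so for a widely used service the serving side can quickly grow beyond the training budget.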

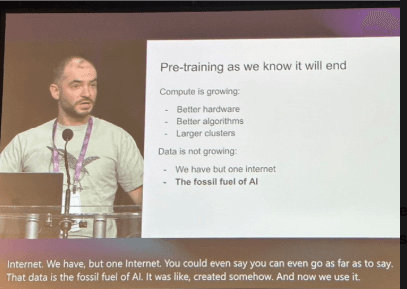

Perhaps the most fundamental limit is the availability of training data. With models like GPT-4 having already processed much of the high-quality text available on the internet, we're approaching a data wall. The supply of human-written text is finite. You might think of simply training on synthetic data instead, but synthetic data risks amplifying existing biases and hallucinations, which may poison the entire model. In his talk at NeurIPS 2024, Ilya Sutskever described data as the limiting factor of the pretraining era, noting that "we have but one internet".

Furthermore, the scaling laws themselves show a critical sign: while performance keeps improving with scale, the rate of improvement decreases. Because of the power-law shape, each doubling of parameters or training data reduces the loss by roughly the same fraction, so the absolute gains get smaller and smaller. That is a mathematical property of the curves themselves, not something better engineering alone can change.
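The diminishing returns follow directly from the power-law form. The sketch below assumes Kaplan et al.'s fit for parameters, L(N) = (N_c / N)^α_N with α_N ≈ 0.076 and N_c ≈ 8.8 × 10^13, starts from an illustrative 1.5 billion parameters, and doubles the model five times:

```python
ALPHA_N = 0.076  # exponent for parameters, as fitted by Kaplan et al. (2020)
N_C = 8.8e13     # fitted constant, in non-embedding parameters


def loss_from_params(n: float) -> float:
    """Power-law fit L(N) = (N_c / N) ** alpha_N, in nats per token.
    Assumes training data is plentiful, so parameters are the bottleneck."""
    return (N_C / n) ** ALPHA_N


sizes = [1.5e9 * 2 ** k for k in range(6)]  # illustrative start, doubled 5 times
losses = [loss_from_params(n) for n in sizes]

for n, prev, cur in zip(sizes[1:], losses, losses[1:]):
    # The ratio stays at 2 ** -0.076 ≈ 0.949 while the absolute gain shrinks.
    print(f"N = {n:.1e}: loss {cur:.3f}  gain {prev - cur:.4f}  ratio {cur / prev:.4f}")
```

Each doubling multiplies the loss by the same factor of about 0.949, roughly a 5% reduction, so every step buys a smaller absolute improvement than the one before while requiring more resources.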

Another soft but crucial limit is that optimizing for test loss has become increasingly disconnected from real-world usefulness. Test loss and popular benchmarks don't necessarily reflect how models perform in real-life applications, which raises a fundamental question: why put so many resources into achieving the best benchmark score if it doesn't translate into better performance on real-world tasks?

The scaling paradigm hasn't hit a hard technical wall, but rather an economic and practical one. As AI transitions from research to product, the pure scaling approach is giving way to a more nuanced strategy that balances size with efficiency, quality, and practical utility.

6. Scaling Will Change

So, has scaling come to an end? Far from it. The AI research landscape is continuously evolving, finding new paths forward even as traditional scaling approaches face limitations. What we're witnessing isn't the end of scaling but its transformation.

It's important to remember that we've primarily discussed the pretraining phase of model development. Modern AI systems undergo multiple stages of training: pre-training, supervised fine-tuning, and RLHF (Reinforcement Learning from Human Feedback). Each stage presents unique opportunities for innovation and optimization.

In my opinion, one of the most promising approaches is multimodal learning, which means including images, audio, and video in the training data. This could push back the existing limits and lead to more general models that understand the real world more deeply. The challenge, however, is computational: processing video in particular requires significantly more resources than text alone, but it has the potential to reshape the AI world once again.

Another innovative direction is shifting scaling effort from training to inference. Rather than just building larger models, researchers are developing techniques that let models spend more time and compute to "think" about a problem. This approach has unlocked impressive new capabilities, such as multi-step reasoning and checking their own outputs. OpenAI's o-series models (o1, o3, o4-mini) showcase its potential, demonstrating remarkable capabilities through extended inference-time computation rather than raw parameter counts.
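OpenAI has not published how its o-series models use their extra inference compute, so the following is not their method. It illustrates self-consistency (majority voting over several sampled answers), one simple, publicly documented way to trade more inference-time compute for accuracy. The solver is a hypothetical random stand-in for a model that gives the right answer 60% of the time:

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, correct: int = 42, p_correct: float = 0.6) -> int:
    """Hypothetical stand-in for one sampled model answer: right with
    probability p_correct, otherwise one of several wrong answers."""
    if rng.random() < p_correct:
        return correct
    return rng.choice([40, 41, 43, 44])


def majority_vote(rng: random.Random, n_samples: int) -> int:
    """Spend more inference compute: sample n answers, return the most common."""
    answers = [noisy_solver(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated questions answered correctly (seeded, repeatable)."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == 42 for _ in range(trials))
    return hits / trials


for n in (1, 5, 15):
    print(f"{n:>2} samples per question: accuracy {accuracy(n):.2f}")
```

Accuracy rises with the number of samples even though the "model" itself never changes. The trick only works when errors are spread out, so that the right answer is more common than any single wrong one; a model that is confidently and consistently wrong gains nothing from voting.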

These approaches open up new dimensions for scaling that don't depend solely on ever-larger pretraining runs.

The scaling paradigm isn't ending; rather, it's evolving. The fundamental insight that larger models trained with more data and computation perform better remains valid, but the way it is applied is becoming more sophisticated and nuanced.

As models reach human-level performance in various domains, the focus shifts from raw capability to reliability, efficiency, and specialized expertise. The future of AI scaling may not be measured simply in parameter counts but in the intelligent orchestration of computational resources across training, fine-tuning, and inference.

So the scaling revolution hasn't hit a wall; it's just taking a more interesting and complex path forward. As new techniques emerge, many of them will in turn be improved by scaling them up.

7. Conclusion

In my opinion, the story of scaling in artificial intelligence is one of the most remarkable chapters in the history of technology. What began in 2012 with the fusion of GPUs and deep neural networks evolved through the Transformer revolution into a new era of models with stunning capabilities. Throughout this journey, one fundamental principle has remained consistent: scaling works!

However, as AI transitions from a research project for specialized applications to a general consumer technology, the economics of scaling have changed fundamentally. Companies must balance capability against financial viability. This shift doesn't mark the end of the scaling paradigm, but rather its transformation into a more nuanced approach.

The scaling era created an industry in which developing frontier models requires enormous capital investment, often hundreds of millions of dollars. Yet the revenue that sustains these companies comes primarily from inference, that is, from serving these models to users. This economic reality has naturally shifted priorities toward efficiency and optimization rather than simply creating the largest possible base models.

This doesn't mean the scaling era has ended. Rather, scaling is shifting to a more measured and multidimensional form than the exponential growth we saw between GPT-1 and GPT-4. Scaling now means not just adding parameters and data but also focusing on architectural efficiency, inference optimization, multimodal capabilities, and specialized expertise.

As we look to the future, the companies and researchers that succeed will be those who understand that true scaling isn't just about making models bigger; it's about making them better, more efficient, and more adaptable to real-world needs. Anthropic is a good example of this trend: it realized that a significant share of Claude usage comes from software engineers, so it decided to optimize its Sonnet model especially for coding use cases, which worked remarkably well.

If you want to dive deeper into why scaling works at all, I recommend the new book by podcaster Dwarkesh Patel: The Scaling Era: An Oral History of AI, 2019–2025.

But keep in mind: even the greatest minds of our time can't predict the future with certainty.

If you've made it this far, thanks for your valuable attention.

Mario :D

-----

Sources

- Ilya Sutskever on the Lex Fridman Podcast: https://youtu.be/13CZPWmke6A?si=XYYYC0Y_1r0D-T-9

- Dario Amodei on the Lex Fridman Podcast: https://www.youtube.com/watch?v=ugvHCXCOmm4&t=9791s

- "Attention Is All You Need" paper: https://arxiv.org/abs/1706.03762

- GPT-1 post: https://openai.com/index/language-unsupervised/

- GPT-2 post: https://openai.com/index/gpt-2-1-5b-release/

- GPT-3 paper: https://arxiv.org/abs/2005.14165

- GPT-4 post: https://openai.com/index/gpt-4/

- Scaling Laws paper: https://arxiv.org/abs/2001.08361

- Ilya Sutskever talk at NeurIPS 2024: https://www.youtube.com/watch?v=1yvBqasHLZs&t=465s

- Dwarkesh Patel, The Scaling Era: An Oral History of AI, 2019–2025: https://amzn.eu/d/aY0LlKl